Earth Observation: Remote sensing and systems

- December 11, 2020

- Carlo De Michele

- Tutorial Students, Uncategorized

For more than 150 years, people have recognised the advantages of getting a little distance between themselves and what they are studying. We call this technique ‘remote sensing’: a measurement is taken remotely, without physical contact. The first people to do remote sensing were balloonists in the 1840s, who floated cameras up to take pictures of the ground below them. Technology has since advanced so far that we now observe the Earth from satellites, which take remote measurements of the Earth’s surface. This field of study is called Earth Observation.

Earth Observation Satellites

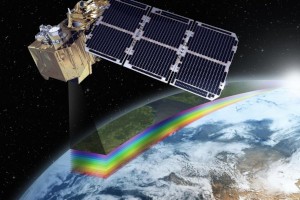

These satellites have sensors installed onboard. Earth observation satellites travel in low orbits, between 600 and 1000 km above the surface (roughly the distance between Sydney and Brisbane). In such a low orbit, a satellite takes about 100 minutes to complete one revolution around the Earth. Some satellites make meteorological (weather) observations from geostationary orbit (GEO, about 36,000 km above the Earth). From there, a satellite stays above the same point and always records data from the same portion of the Earth’s surface.
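The link between altitude and the time for one revolution can be checked with Kepler's third law. The sketch below assumes a perfectly circular orbit and uses standard textbook constants for the Earth's mean radius and gravitational parameter; neither value comes from this article, and the function name is just illustrative.

```python
import math

# Standard physical constants (assumptions, not taken from the article).
EARTH_RADIUS_KM = 6371.0   # mean radius of the Earth
MU_EARTH = 398600.4418     # Earth's gravitational parameter GM, in km^3/s^2


def orbital_period_minutes(altitude_km: float) -> float:
    """Period of a circular orbit at the given altitude above the surface."""
    orbit_radius_km = EARTH_RADIUS_KM + altitude_km
    period_seconds = 2 * math.pi * math.sqrt(orbit_radius_km ** 3 / MU_EARTH)
    return period_seconds / 60


# Low Earth orbits from the text, plus the geostationary altitude.
for altitude in (600, 800, 1000, 35786):
    print(f"{altitude:>6} km -> {orbital_period_minutes(altitude):7.1f} min")
```

Under these assumptions, the low orbits come out at roughly 95 to 105 minutes, in line with the figure of about 100 minutes, while the geostationary altitude gives a period close to one day.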

Earth observation satellites carry two types of sensors. “Passive” sensors measure solar radiation reflected from the Earth’s surface. “Active” sensors, such as radar, have their own energy source: they emit microwave pulses and record the echo. Passive sensors were the first to be put onboard commercial satellites, in the early 1970s, and they are very popular for mapping and monitoring vegetation.

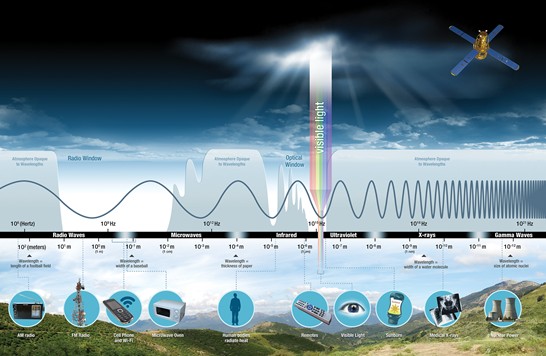

To understand how ‘passive’ sensors work, we need to recall some basics of physics and energy, specifically the concept of the ‘electromagnetic spectrum’ (EMS for short). When you send a text message, take a picture for your Instagram, or pop popcorn in a microwave oven, you are using electromagnetic energy. Electromagnetic energy travels in waves. These waves span a range from very long radio waves to very short gamma rays (see Figure 2). The human eye can detect only a small portion of this spectrum, called visible light. A radio detects a different portion of the spectrum, and an X-ray machine uses yet another portion (that our eyes cannot see).

What is the Electromagnetic Spectrum?

Waves across the electromagnetic spectrum (e.g. the light Earth receives from the Sun, radio waves, microwaves) behave in similar ways. When a light wave encounters an object (e.g. a rock, a leaf, a tree, a lake), it can be transmitted, reflected, absorbed, refracted, polarized, diffracted, or scattered. What happens depends on the composition of the object and the wavelength of the light. Short wavelengths, such as violet light, are scattered more easily than longer red wavelengths. That is why we see a red sunset in a city with lots of smog: the shorter wavelengths are scattered out of the line of sight, leaving mostly red light to reach our eyes.
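To put a rough number on how much more strongly short wavelengths scatter, the sketch below assumes Rayleigh scattering, where scattered intensity varies as 1/λ⁴. That law holds for particles much smaller than the wavelength (such as air molecules) and is an assumption here; larger smog particles scatter differently. The 400 nm and 700 nm values are representative wavelengths for violet and red light, not figures from this article.

```python
def rayleigh_relative_scattering(wavelength_nm: float, reference_nm: float) -> float:
    """How many times more strongly `wavelength_nm` is scattered than
    `reference_nm`, assuming scattered intensity is proportional to 1 / wavelength^4."""
    return (reference_nm / wavelength_nm) ** 4


VIOLET_NM = 400.0  # representative violet wavelength (assumption)
RED_NM = 700.0     # representative red wavelength (assumption)

ratio = rayleigh_relative_scattering(VIOLET_NM, RED_NM)
print(f"Violet light is scattered about {ratio:.1f} times more than red light")
```

Under this simplified model, violet light is scattered roughly nine times more strongly than red light.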

How does a remote sensor work?

Sensors on satellites measure the radiation reflected (and emitted) from the Earth’s surface. This measurement is made in intervals of the electromagnetic spectrum called spectral bands. The ability to distinguish between different types of surfaces (soil, vegetation, water) is based on their spectral response. It depends on the ‘width’ and location within the EMS of the bands in which the sensor performs its measurements (e.g. whether the bands are designed to capture energy in the blue, green and red regions of the spectrum).
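One common way to use spectral response is to compare reflectance in two bands. The sketch below computes the Normalized Difference Vegetation Index (NDVI), a widely used index that this article does not itself name, from red and near-infrared reflectance. The reflectance values are made-up illustrative numbers, not measurements, chosen only to mimic the typical pattern that healthy vegetation reflects little red light and a lot of near-infrared light.

```python
def ndvi(red: float, nir: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - red) / (NIR + red)."""
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; avoid dividing by zero
    return (nir - red) / total


# Hypothetical reflectance values (fractions between 0 and 1), for illustration only.
surfaces = {
    "vegetation": {"red": 0.05, "nir": 0.50},
    "bare soil": {"red": 0.25, "nir": 0.30},
    "water": {"red": 0.03, "nir": 0.01},
}

for name, bands in surfaces.items():
    print(f"{name:>10}: NDVI = {ndvi(bands['red'], bands['nir']):+.2f}")
```

With these illustrative inputs, vegetation gets a high positive value, bare soil a value near zero, and water a negative value, which is how two bands are enough to tell the three surfaces apart.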

Our eyes can only see visible light. But light comes in many other “colors”, such as infrared, ultraviolet and gamma rays, that are invisible to the naked eye. Satellites often ‘see’ the Earth in visible light (blue, green, yellow, red, etc.), delivering natural color images. But they also carry sensors that can detect other wavelengths, most commonly near infrared and short-wave infrared. Sometimes they also detect thermal bands, which give information on temperature.

Sensors have different characteristics depending on the spectral region they explore. In general, they detect electromagnetic radiation from small portions of the terrestrial (Earth’s land) surface. The dimensions of each portion define the spatial resolution of the image, which is built up by the movement of both the sensor and the satellite along its orbit. For each portion, the geographic location is noted and a number is assigned that corresponds to the amount of radiation measured. The resulting digital image can be processed using specific software capable of managing the large amount of data detected by the satellite sensor.
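The idea of a digital image as a grid of numbers, each tied to a location on the ground, can be sketched as follows. This is a minimal illustration, not how any particular image format or software stores data: the `RasterGrid` class, its field names, the coordinates and the pixel values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RasterGrid:
    """A tiny north-up image: one number per ground cell (all names hypothetical)."""
    origin_x: float          # map x coordinate of the upper-left corner, in meters
    origin_y: float          # map y coordinate of the upper-left corner, in meters
    pixel_size: float        # side length of one ground cell, in meters
    values: List[List[int]]  # measured radiation, stored as one number per cell

    def pixel_center(self, row: int, col: int) -> Tuple[float, float]:
        """Map coordinates of the center of the cell at (row, col)."""
        x = self.origin_x + (col + 0.5) * self.pixel_size
        y = self.origin_y - (row + 0.5) * self.pixel_size  # rows run north to south
        return x, y


# A 2 x 2 image with 10 m cells; coordinates and values are made up.
grid = RasterGrid(origin_x=500000.0, origin_y=4600000.0, pixel_size=10.0,
                  values=[[812, 790], [655, 1020]])

for row in range(2):
    for col in range(2):
        print(grid.pixel_center(row, col), "->", grid.values[row][col])
```

The pixel size plays the role of the spatial resolution: halving it would quadruple the number of values needed to cover the same area.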

Copernicus: a Sentinel from space for Earth Observation

Copernicus is an Earth observation (EO) program of the European Union, run in partnership with the European Space Agency, the EU Member States and EU Agencies. The program aims to achieve a global, continuous, high-quality and wide-ranging Earth observation capacity. It uses a constellation of satellites, the Sentinels, that carry a range of technologies, such as radar and multi-spectral imaging instruments for land monitoring. Sentinel-2A was launched in 2015, followed by Sentinel-2B in 2017. Both orbit the Earth at an altitude of 786 km and, with a swath width of 290 km, together they cover all land surfaces, large islands, inland and coastal waters every 3-5 days.

The Sentinel-2 satellites carry a high-resolution multispectral imager with 13 spectral bands that cover the visible and infrared regions of the spectrum. It enables the mapping of ground objects as small as 10 x 10 meters.
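To get a feel for the data volume these figures imply, the short calculation below combines the 290 km swath width with the 10 m pixel size. It is back-of-the-envelope arithmetic only: it assumes every pixel is 10 m across, and the function names are illustrative.

```python
def pixels_across_swath(swath_km: float, pixel_m: float) -> int:
    """Number of pixels needed to span the swath at the given pixel size."""
    return round(swath_km * 1000 / pixel_m)


def pixels_per_square_km(pixel_m: float) -> int:
    """Number of pixels covering one square kilometer of ground."""
    per_side = round(1000 / pixel_m)
    return per_side * per_side


print(pixels_across_swath(290, 10))  # pixels in one line across the swath
print(pixels_per_square_km(10))      # pixels per square kilometer, per band
```

Under that assumption, a single line across the swath holds 29,000 pixels, and every square kilometer of ground needs 10,000 values in each band.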

Want to know more?

Check out our blog series to find out more about –